When you are working with API’s and Logic Apps and there is lots of rows of data involved you will sometimes come up with the following problems:

- An API often pages the data once you go beyond a certain number of records

-

When you want to insert lots of rows with a Logic App into SQL you will usually have a loop which iterates over a dataset and does inserts

- This takes a long time to execute

- There is a cost implication to your implementation when you pay for each action

I recently had a scenario in this space and used quite a cool approach to solve the problem which I wanted to share.

Scenario

The scenario I had started in Shopify. When I add products & collections to my online store in Shopify I wanted to have a daily extract from Shopify to synchronise these new product/collections to my Azure SQL database which I use for reporting with Power BI.

To achieve this I would have a Logic App with a nightly trigger which would take the following actions:

- Clean the table of which product is in which collection

- Extract all products in collections via the Shopify API

- Insert them all into the SQL table

The end result is I have a table which has all of the products in each collection listed for my analysis.

At a high level the scenario looks like the below diagram:

Implementation

As I mentioned above the problem is two folded here, when we query Shopify there many be thousands of products so we need to use a paging approach to query their API, secondly I want to insert into SQL in batches to try to minimise the number of action calls on SQL to improve performance and reduce cost.

Lets look at how I did this.

Paging API calls to Shopify

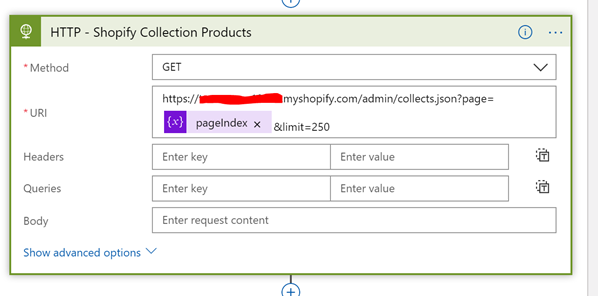

When it comes to the Shopify API you are able to execute a GET operation against the collection and it will return the products within it. If you have lots of products you can get them in pages. I chose to get 250 per time and you need to pass a page index to the API as a query parameter. The below picture shows you what a call to Shopify would look like with the paging parameters set.

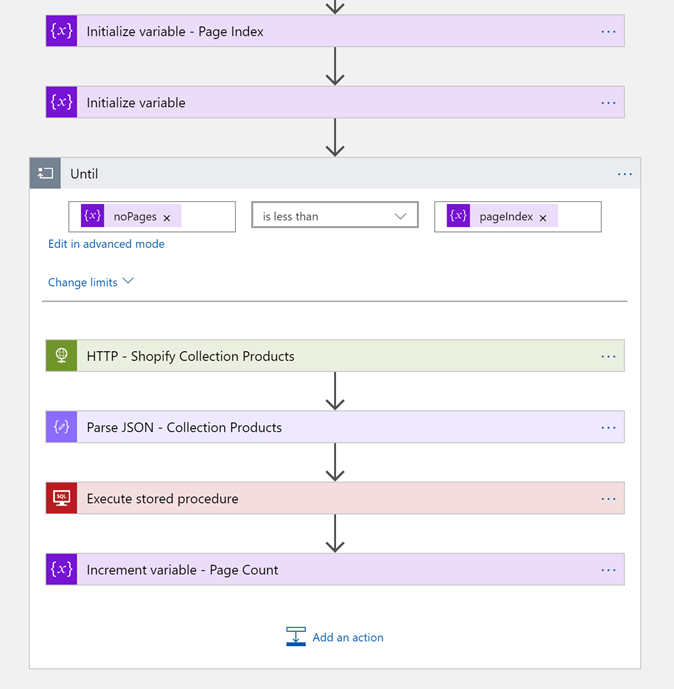

Once I can make this single call I can then use a loop around the call to Shopify, but before I do this I need to know how many pages there are. I can do this by executing a GET against the collections API with the count extension on the url. This will return me the number of products in collections. You can see this below.

From the response I can parse the count and then I would set a variable which is the number of pages which I will work out with a calculation of dividing the number of products by the number of products I will get per page. I will also add 1 to this so I get 1 more page than the count incase the division is not a whole number. The calculation is shown below.

add(div(body(‘Parse_JSON_-_Count’)?[‘count’], 250),1)

Now I know the number of pages I can implement the loop where I will increment the page index each time until we have matched the number of pages. Within the loop we will get the next page of data from the API as shown in the picture below.

SQL Json Insert

It would be possible to just call the insert action for SQL in the logic app but if there are say 10000 products then the loop will do 10000 iterations which will take quite a while to run and also there is a cost associated with that. I wanted to look at options for inserting the data in batches. If I could insert the entire page returned from the API as a batch then with my 250 records at a time I could reduce the 10000 iterations down to 40. That should be a lot less time and a much lower cost.

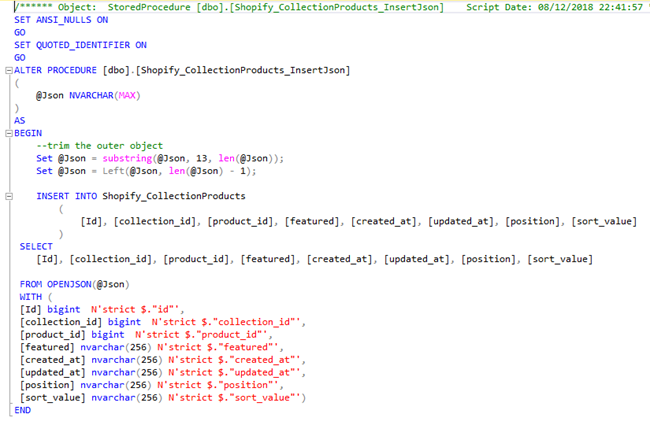

To do this I developed a stored procedure where I passed the entire JSON string from the API response to the stored procedure as an NVARCHAR(max) parameter. In the stored procedure I was fortunate that the format of the json in this case was very table/row like making it easy to do this insert. I used SQL’s OPENJSON feature and was able to insert the entire page of data from the API in a simple insert statement as you can see in the SQL below.

Summary

Once it was all put together I was able to run my Logic App to refresh my SQL database each night and the process took 10 seconds to copy across 2500 records. This took 10 iterations of the loop.

That’s a nice and easy to support and run Logic App which does a nice job in this case.